Robots

Description

In OmniGibson, Robots define agents that can interact with other objects in a given environment. Each robot can interact by deploying joint commands via its set of Controllers, and can perceive its surroundings via its set of Sensors.

OmniGibson supports both navigation and manipulation robots, and allows for modular specification of individual controllers for controlling the different components of a given robot. For example, the Fetch robot is a mobile manipulator composed of a mobile (two-wheeled) base, two head joints, a trunk, seven arm joints, and two gripper finger joints. Fetch owns 4 controllers, one each for the base, the head, the trunk + arm, and the gripper. There are multiple options for each controller depending on the desired action space. For more information, check out our robot examples.

It is important to note that robots are full-fledged StatefulObjects, and thus leverage the same APIs as normal scene objects and can be treated as such. Robots can be thought of as StatefulObjects that additionally own controllers (robot.controllers) and sensors (robot.sensors).

Usage

Importing

Robots can be added to a given Environment instance by specifying them in the config that is passed to the environment constructor via the robots key. This is expected to be a list of dictionaries, where each dictionary specifies the desired configuration for a single robot to be created. For each dict, the type key is required and specifies the desired robot class, and global position and orientation (in (x,y,z,w) quaternion form) can also be specified. Additional keys can be specified and will be passed directly to the specific robot class constructor. An example of a robot configuration is shown below in .yaml form:

single_fetch_config_example.yaml
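As a complement to the YAML example, the same structure can be expressed as a plain Python dict, which is convenient when configs are built programmatically. The sketch below builds such a dict and checks the constraints described above: a list under the robots key, a required type key per entry, and an (x, y, z, w) orientation quaternion. The position/orientation key names, the obs_modalities pass-through key, and the validate_robot_cfgs helper are assumptions for illustration; the commented og.Environment(configs=cfg) call follows the pattern used in OmniGibson examples, but should be checked against your installed version.

```python
import math

# Hypothetical environment config mirroring the structure described above.
# Key names other than "robots" and "type" are assumptions for illustration.
cfg = {
    "robots": [
        {
            "type": "Fetch",                      # required: robot class name
            "position": [0.0, 0.0, 0.0],          # global (x, y, z)
            "orientation": [0.0, 0.0, 0.0, 1.0],  # global (x, y, z, w) quaternion
            "obs_modalities": ["rgb", "depth"],   # extra key, forwarded to the robot constructor
        },
    ],
}


def validate_robot_cfgs(cfg):
    """Hypothetical helper: sanity-check the robots list before building an env."""
    robots = cfg.get("robots")
    if not isinstance(robots, list):
        raise ValueError("'robots' must be a list of dicts")
    for i, robot_cfg in enumerate(robots):
        if "type" not in robot_cfg:
            raise ValueError(f"robot {i} is missing the required 'type' key")
        pos = robot_cfg.get("position")
        if pos is not None and len(pos) != 3:
            raise ValueError(f"robot {i}: position must be (x, y, z)")
        quat = robot_cfg.get("orientation")
        if quat is not None:
            if len(quat) != 4:
                raise ValueError(f"robot {i}: orientation must be (x, y, z, w)")
            norm = math.sqrt(sum(q * q for q in quat))
            if abs(norm - 1.0) > 1e-6:
                raise ValueError(f"robot {i}: orientation quaternion is not unit-length")
    return True


validate_robot_cfgs(cfg)
# With OmniGibson installed, the same dict would then be passed to the
# environment constructor, e.g.:
#   import omnigibson as og
#   env = og.Environment(configs=cfg)
```

Validating up front surfaces a missing type key or a malformed quaternion before any simulation is launched.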

Runtime

Usually, actions are passed to robots and observations are retrieved via obs, reward, terminated, truncated, info = env.step(action). However, actions can also be deployed and observations retrieved directly from the robot using the following APIs:

- Applying actions: robot.apply_action(action), where action is a 1D NumPy array. For more information, please see the Controller section!
- Retrieving observations: obs, info = robot.get_obs(), where obs is a dict mapping observation name to observation data, and info is a dict of relevant metadata about the observations. For more information, please see the Sensor section!
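Because the action is a single flat array, each controller consumes its own contiguous slice of it. The sketch below assembles one such vector for a Fetch-like robot with the four controllers described earlier (base, head, trunk + arm, gripper). The per-controller dimensions, their ordering, and the build_action / split_action helpers are hypothetical (real values depend on which controllers are configured), and plain Python lists stand in for the 1D NumPy array to keep the example dependency-free.

```python
# Hypothetical per-controller action dimensions for a Fetch-like robot.
# Real dimensions depend on the chosen controller types and their modes.
CONTROLLER_DIMS = {
    "base": 2,     # e.g. (linear velocity, angular velocity)
    "head": 2,     # e.g. (pan, tilt)
    "arm": 8,      # e.g. trunk + 7 arm joints
    "gripper": 1,  # e.g. binary open/close
}


def build_action(commands, dims=CONTROLLER_DIMS):
    """Concatenate per-controller commands into one flat action vector.

    Iterates in the insertion order of `dims`, so each controller always
    occupies the same slice and can be recovered with `split_action`.
    """
    action = []
    for name, dim in dims.items():
        cmd = commands[name]
        if len(cmd) != dim:
            raise ValueError(f"{name}: expected {dim} values, got {len(cmd)}")
        action.extend(float(x) for x in cmd)
    return action


def split_action(action, dims=CONTROLLER_DIMS):
    """Inverse of build_action: map a flat action back to per-controller slices."""
    out, start = {}, 0
    for name, dim in dims.items():
        out[name] = action[start:start + dim]
        start += dim
    if start != len(action):
        raise ValueError(f"expected {start} action values, got {len(action)}")
    return out


action = build_action({
    "base": [0.5, 0.0],
    "head": [0.0, 0.0],
    "arm": [0.0] * 8,
    "gripper": [1.0],
})
# With a real robot, the (NumPy) equivalent would be passed to robot.apply_action(action).
```

Keeping the controller order fixed is what makes a single flat vector unambiguous: any consumer that knows the dimensions can slice it back apart.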

Controllers and sensors can be accessed directly via the controllers and sensors properties, respectively. Like all objects in OmniGibson, the robot class also exposes common information such as joint data and object states. Note that by default, control signals are updated and deployed every physics timestep via the robot's internal step() callback function. To stop controllers from automatically deploying control signals, set robot.control_enabled = False. Subsequent step() calls will then not update the control signals sent, so the most recent control signal keeps being propagated until the user either re-enables control or manually sets the robot's joint positions / velocities / efforts.
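The effect of control_enabled can be illustrated with a toy model: while it is True, every step() refreshes the deployed command from the latest action; once it is False, step() leaves the last command untouched, so it keeps being applied. The ToyRobot class below is purely illustrative (a single scalar stands in for the joint command) and does not reflect OmniGibson's internal implementation.

```python
class ToyRobot:
    """Illustrative stand-in for a robot's per-physics-step control loop."""

    def __init__(self):
        self.control_enabled = True
        self._pending_action = 0.0   # most recent action passed in
        self.deployed_command = 0.0  # command currently being sent to the joints

    def apply_action(self, action):
        # Store the action; it only becomes the deployed command on step().
        self._pending_action = action

    def step(self):
        # Refresh the deployed command only while control is enabled;
        # otherwise the previous command keeps being sent.
        if self.control_enabled:
            self.deployed_command = self._pending_action
        return self.deployed_command


robot = ToyRobot()
robot.apply_action(1.0)
robot.step()                  # deployed_command becomes 1.0
robot.control_enabled = False
robot.apply_action(-1.0)
robot.step()                  # still 1.0: the last command keeps propagating
```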

Types

OmniGibson currently supports 14 robots, consisting of 4 mobile robots, 4 manipulation robots, 5 mobile manipulation robots, and 1 anthropomorphic "robot" (a bimanual agent proxy used for VR teleoperation). Below, we provide a brief overview of each model:

Mobile Robots

These are navigation-only robots (instances of LocomotionRobot) that consist solely of a movable base.

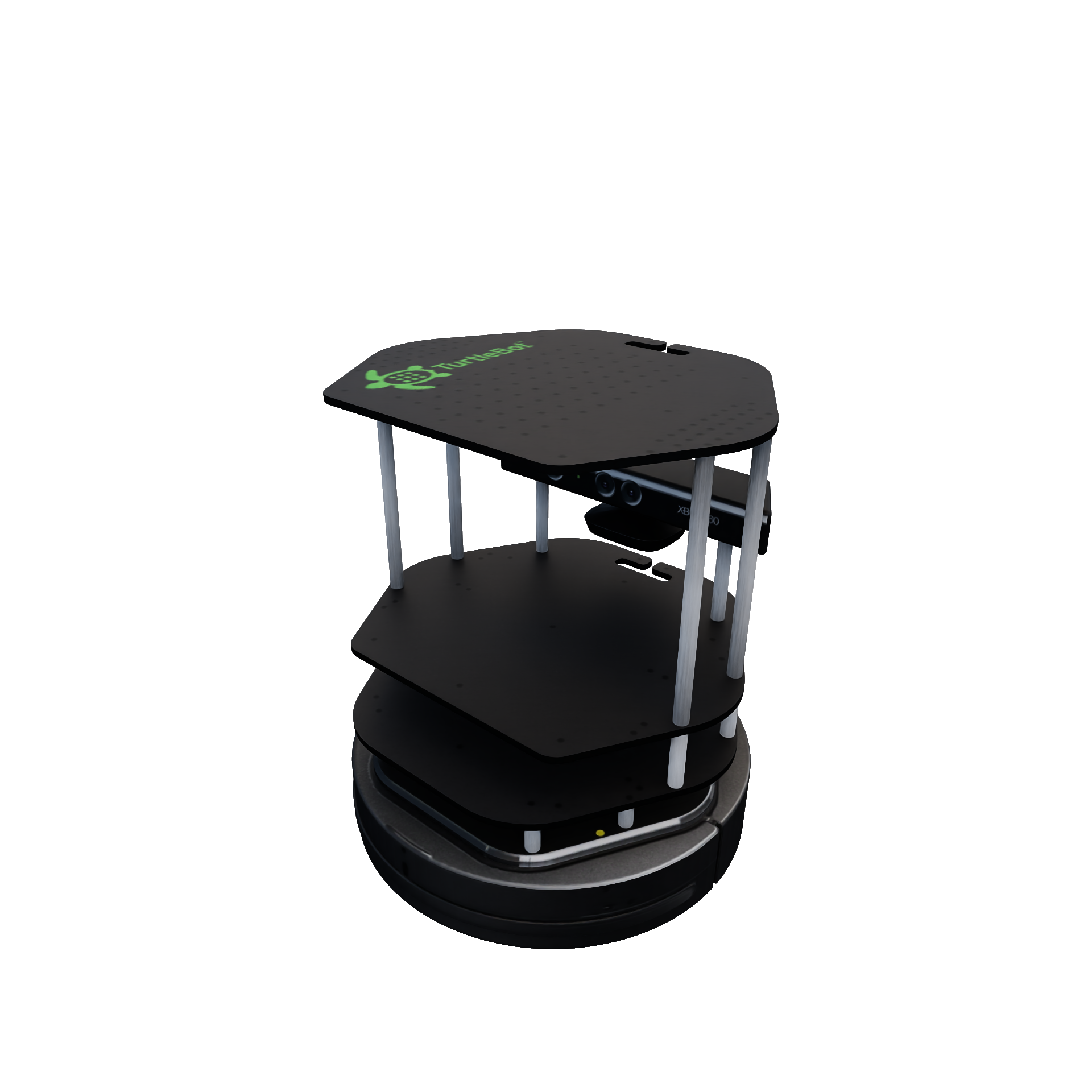

Turtlebot: The two-wheeled Turtlebot 2 model with the Kobuki base.

Locobot: The two-wheeled, open-source LoCoBot model. Note that in our model the arm is disabled and fixed to the base.

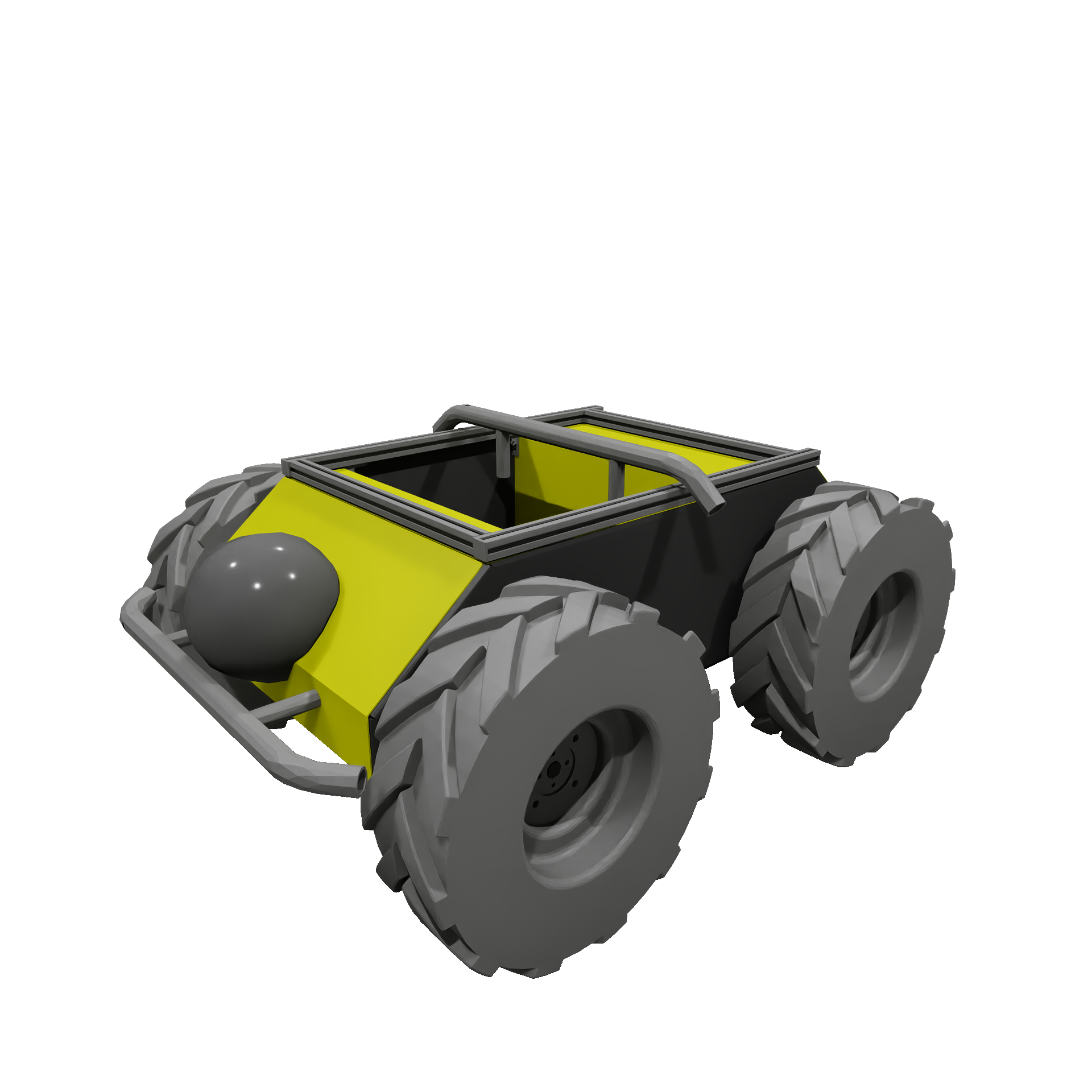

Husky: The four-wheeled Husky UGV model from Clearpath Robotics.

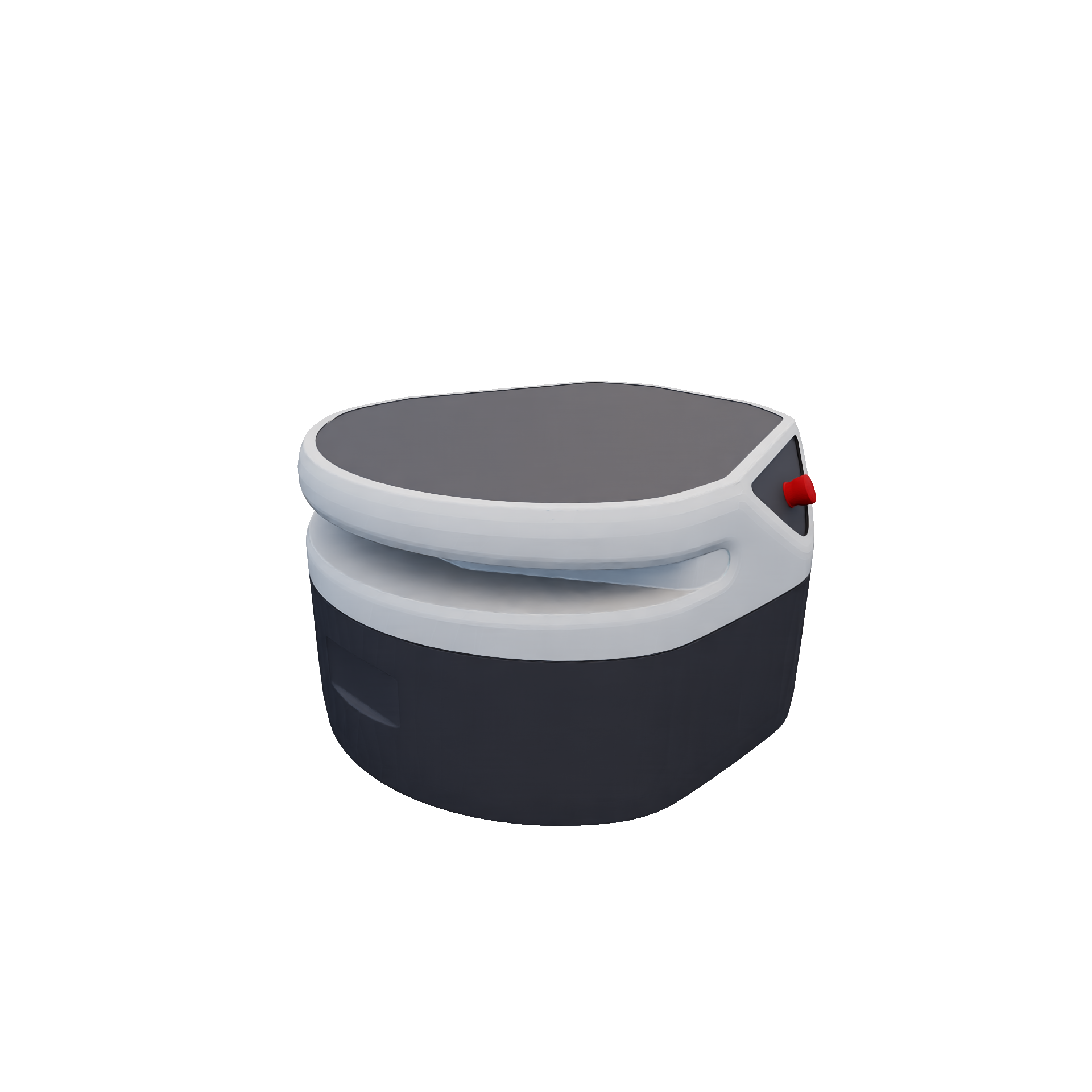

Freight: The two-wheeled Freight model, which serves as the base for the Fetch robot.

Manipulation Robots

These are manipulation-only robots (instances of ManipulationRobot) that cannot move and consist solely of an actuated arm with a gripper attached to its end effector.

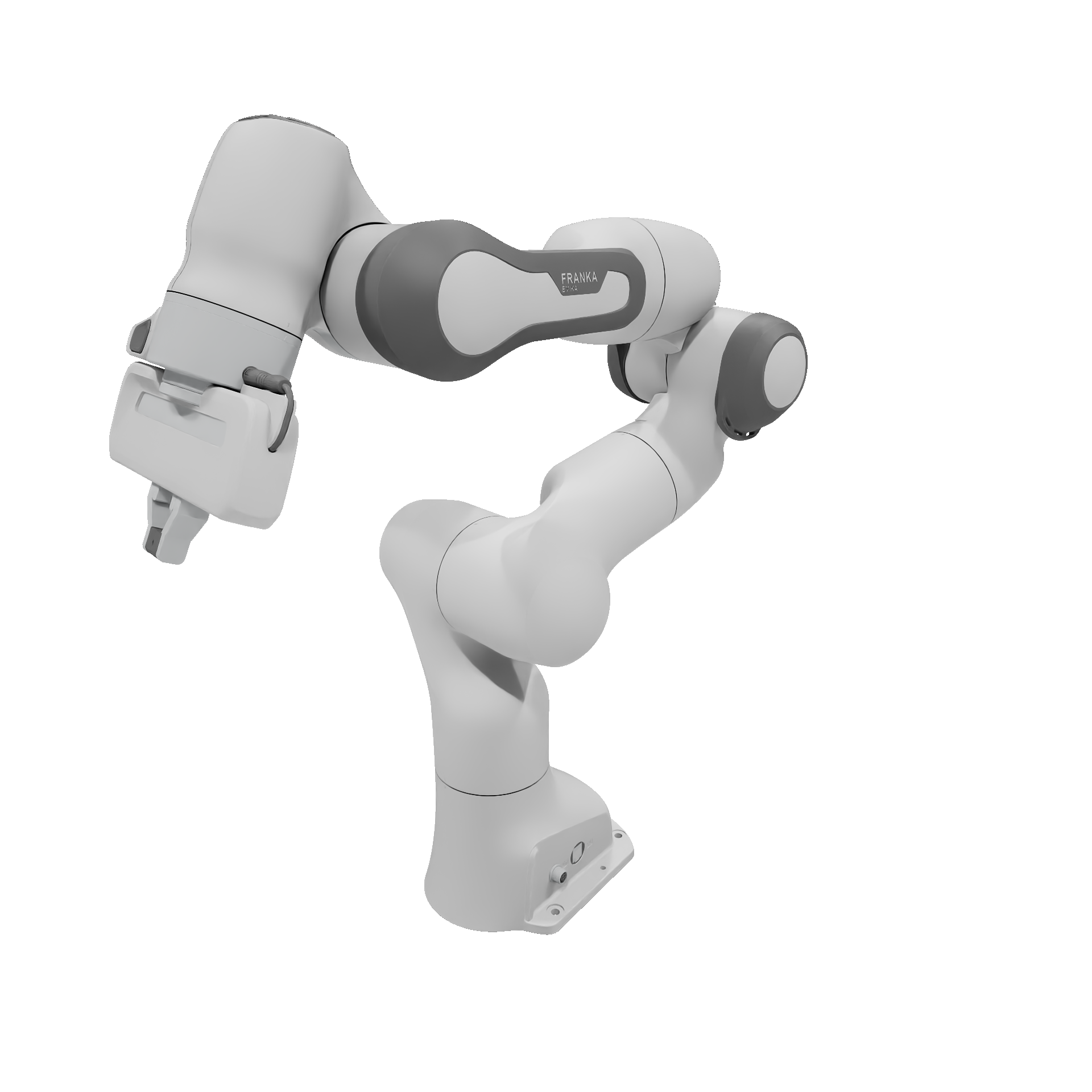

Franka: The popular 7-DOF Franka Research 3 model equipped with a parallel jaw gripper. Note that OmniGibson also includes three alternative versions of Franka with dexterous hands: FrankaAllegro (equipped with an Allegro hand), FrankaLeap (equipped with a Leap hand), and FrankaInspire (equipped with an Inspire hand).

VX300S: The ViperX 300 6DOF model from Trossen Robotics, a 6-DOF arm equipped with a parallel jaw gripper.

A1: The 6-DOF A1 model equipped with an Inspire-Robots dexterous hand.

Franka Mounted: A Franka arm mounted on an aluminum extrusion frame cart.

Mobile Manipulation Robots

These are robots that can both navigate and manipulate (and inherit from both LocomotionRobot and ManipulationRobot), and are equipped with both a movable base and one or more gripper-equipped arms that can actuate.
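The class relationships described in this section can be summarized with a stripped-down skeleton. These are not OmniGibson's actual class definitions, which carry far more state and methods and may include further intermediate classes; the intermediate Robot class and the FetchLike subclass are hypothetical, and the empty bodies exist only to show how multiple inheritance lets a mobile manipulator pass isinstance checks for both capabilities while remaining a StatefulObject.

```python
class StatefulObject:
    """Stand-in for a regular scene object with object states."""


class Robot(StatefulObject):
    """Hypothetical intermediate class: a StatefulObject that also owns
    controllers and sensors."""

    def __init__(self):
        self.controllers = {}  # e.g. {"base": ..., "arm": ...}
        self.sensors = {}      # e.g. {"rgb_camera": ...}


class LocomotionRobot(Robot):
    """Robots with a movable base."""


class ManipulationRobot(Robot):
    """Robots with one or more gripper-equipped arms."""


class FetchLike(LocomotionRobot, ManipulationRobot):
    """A mobile manipulator inherits both capabilities."""


robot = FetchLike()
print(isinstance(robot, LocomotionRobot), isinstance(robot, ManipulationRobot))
# True True
print([cls.__name__ for cls in FetchLike.__mro__])
# ['FetchLike', 'LocomotionRobot', 'ManipulationRobot', 'Robot', 'StatefulObject', 'object']
```

Python's method resolution order visits the shared Robot base only once, after both capability classes, so code written against either LocomotionRobot or ManipulationRobot works unchanged on a mobile manipulator.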

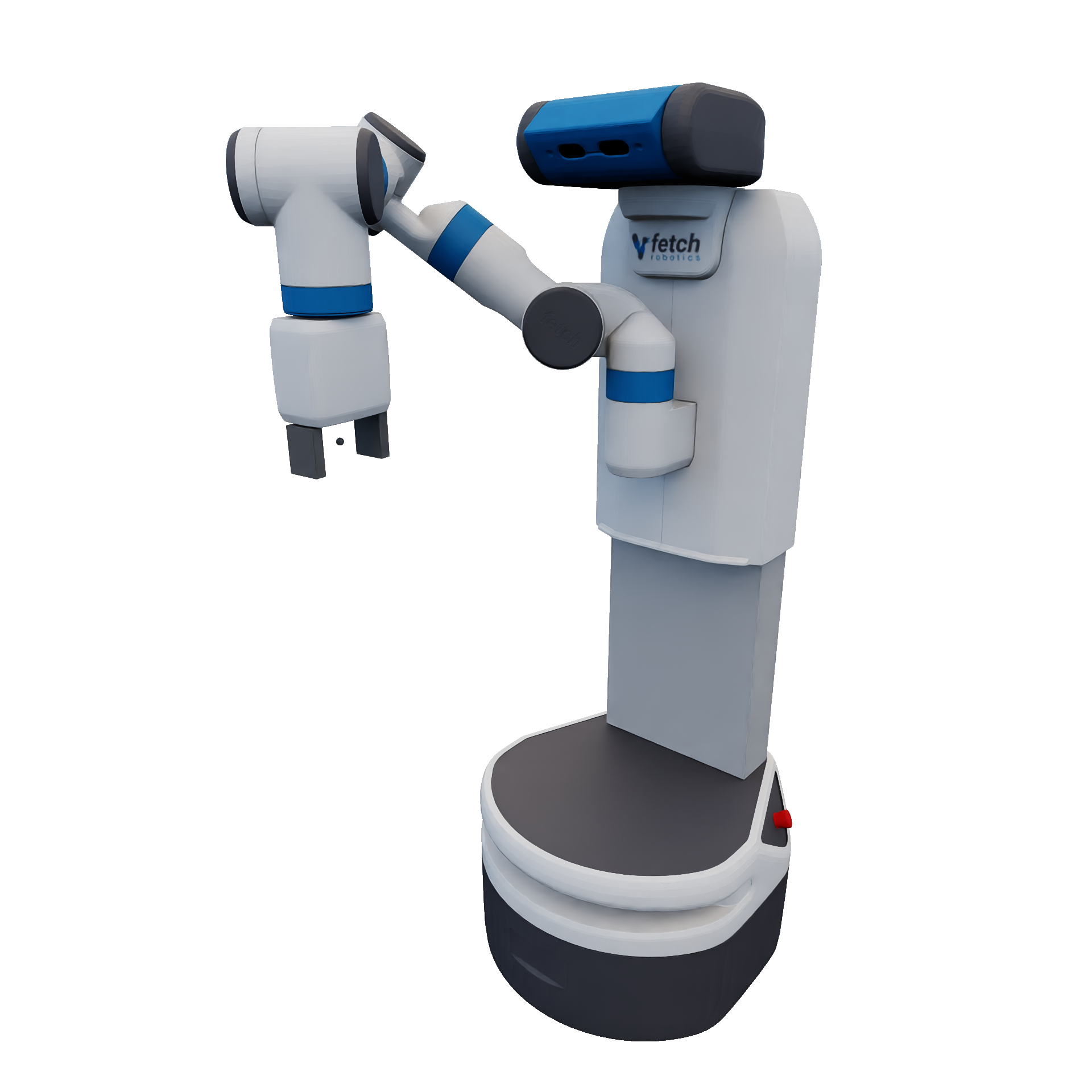

Fetch: The Fetch model, composed of a two-wheeled base, linear trunk, 2-DOF head, 7-DOF arm, and 2-DOF parallel jaw gripper.

Tiago: The bimanual Tiago model from PAL Robotics, composed of a holonomic base (which we model as a 3-DOF (x,y,rz) set of joints), linear trunk, 2-DOF head, two 7-DOF arms, and two 2-DOF parallel jaw grippers.

Stretch: The Stretch model from Hello Robot, composed of a two-wheeled base, 2-DOF head, 5-DOF arm, and 1-DOF gripper.

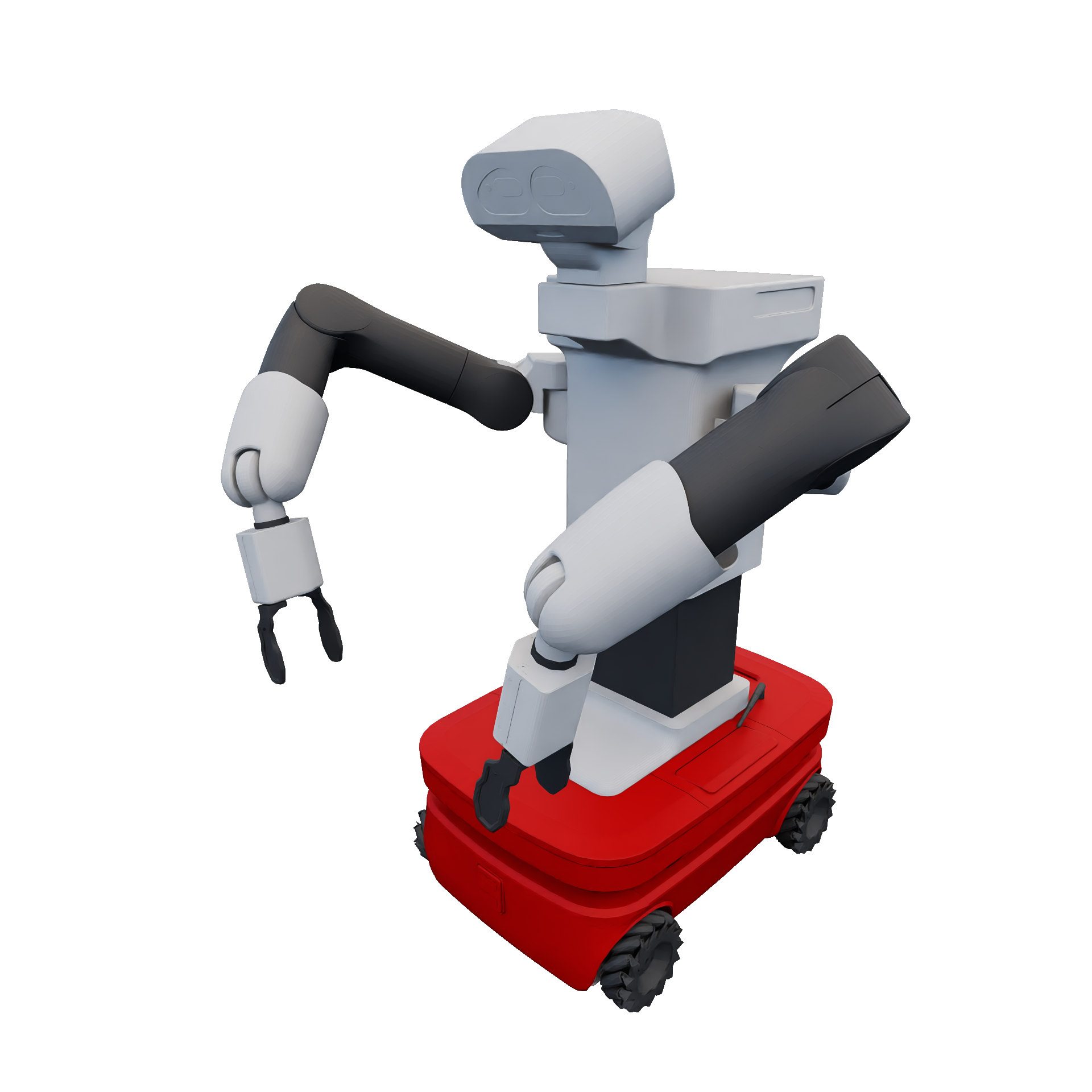

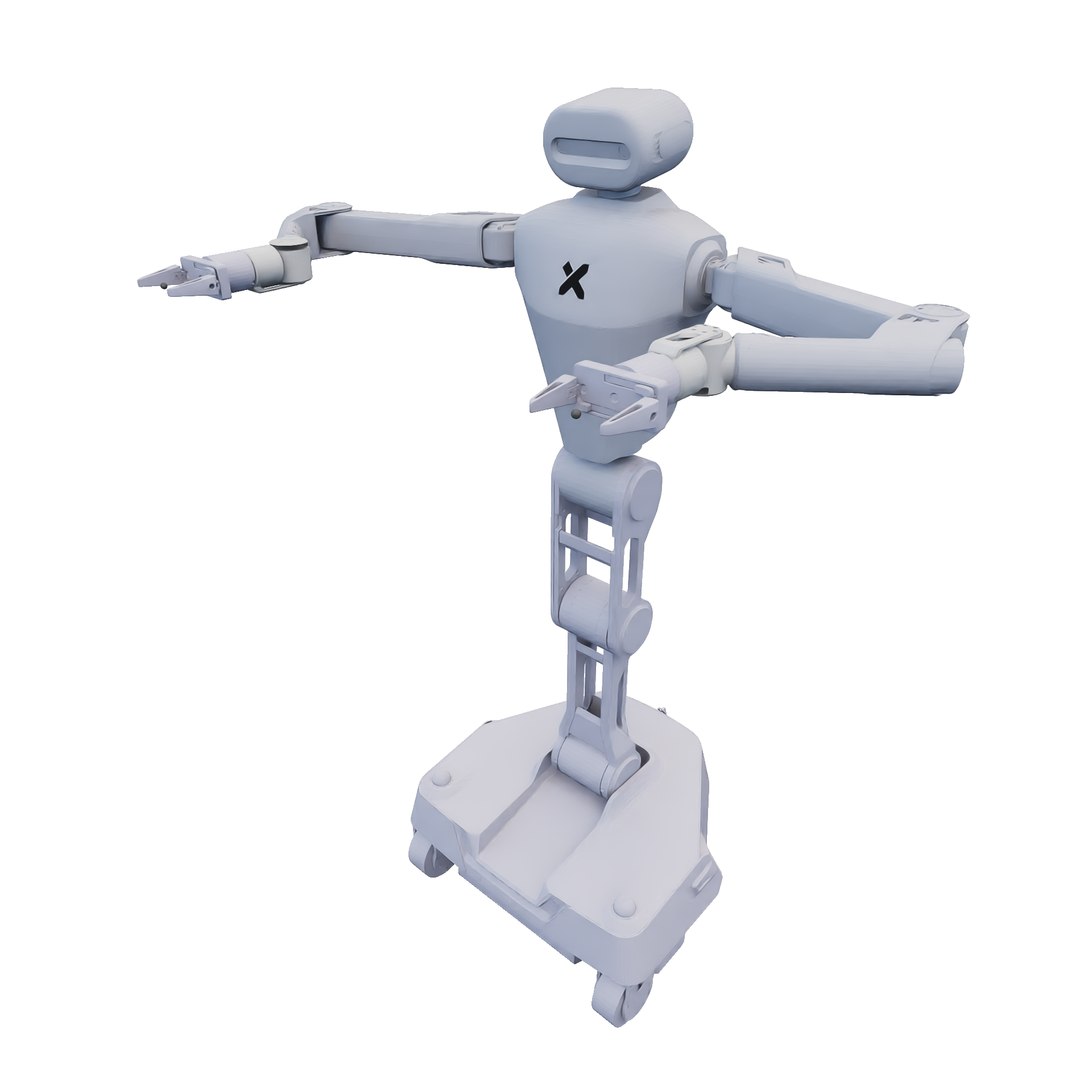

R1: The bimanual R1 model, composed of a holonomic base (which we model as a 3-DOF (x,y,rz) set of joints), 4-DOF torso, two 6-DOF arms, and two 2-DOF parallel jaw grippers.

R1 Pro: The bimanual R1Pro model, composed of a holonomic base (which we model as a 3-DOF (x,y,rz) set of joints), 4-DOF torso, two 7-DOF arms, and two 2-DOF parallel jaw grippers.
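For the holonomic bases above (Tiago, R1, R1 Pro), modeling the base as x, y and rz joints means that a velocity command expressed in the robot's own frame has to be rotated by the current heading before it can drive the x and y joints. The sketch below shows that planar rotation. It assumes the x and y joints translate along fixed world axes and that the rz joint sets the base's yaw; it is a worked illustration of the kinematics, and robot_to_joint_velocities is a hypothetical helper rather than OmniGibson's controller code.

```python
import math


def robot_to_joint_velocities(vx, vy, wz, yaw):
    """Map a robot-frame planar velocity command to (x, y, rz) joint velocities.

    Assumes the x and y joints translate along fixed world axes and that the
    rz joint sets the heading (yaw, in radians) of the base.
    """
    c, s = math.cos(yaw), math.sin(yaw)
    # Rotate the robot-frame linear velocity into the world frame.
    x_dot = c * vx - s * vy
    y_dot = s * vx + c * vy
    # For planar motion, the yaw rate is identical in both frames.
    return x_dot, y_dot, wz


# Driving "forward" (robot-frame +x) while the base faces world +y (yaw = 90 deg).
x_dot, y_dot, rz_dot = robot_to_joint_velocities(1.0, 0.0, 0.2, math.pi / 2)
```

In this example, the forward command ends up driving the y joint (y_dot close to 1.0) while x_dot is essentially zero, which is why the current heading must be read before converting each command.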

Additional Robots

BehaviorRobot: A hand-designed model intended to be used exclusively for VR teleoperation.