BEHAVIOR includes all challenging aspects of robotics and embodied AI: perception, decision making, and control. Agents have to perform 100 activities in household environments based on the information acquired by their onboard sensors, using the interactions enabled by their physical body. Visit our documentation for more details.

Embodiments, Actuation, Sensing

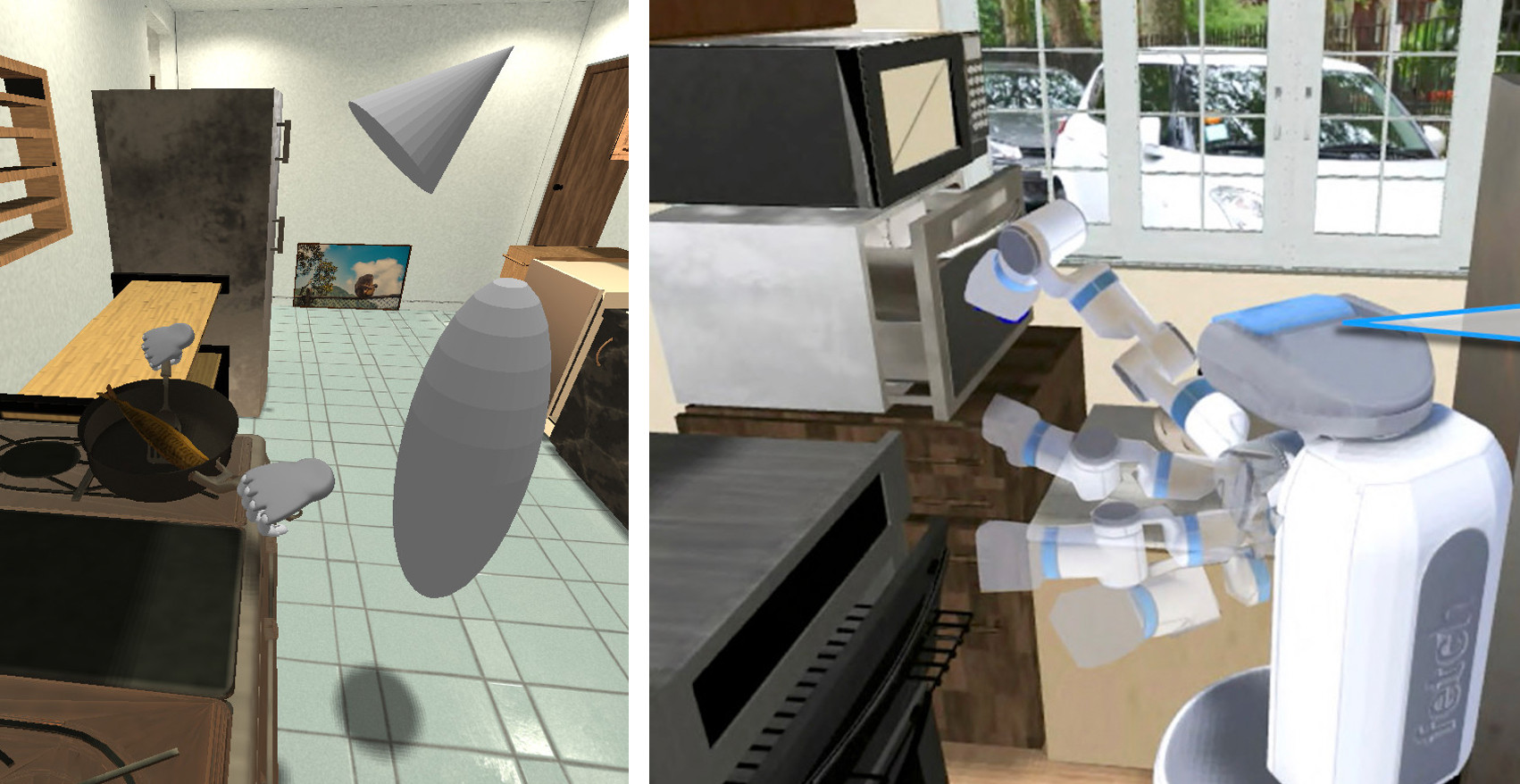

Embodiment

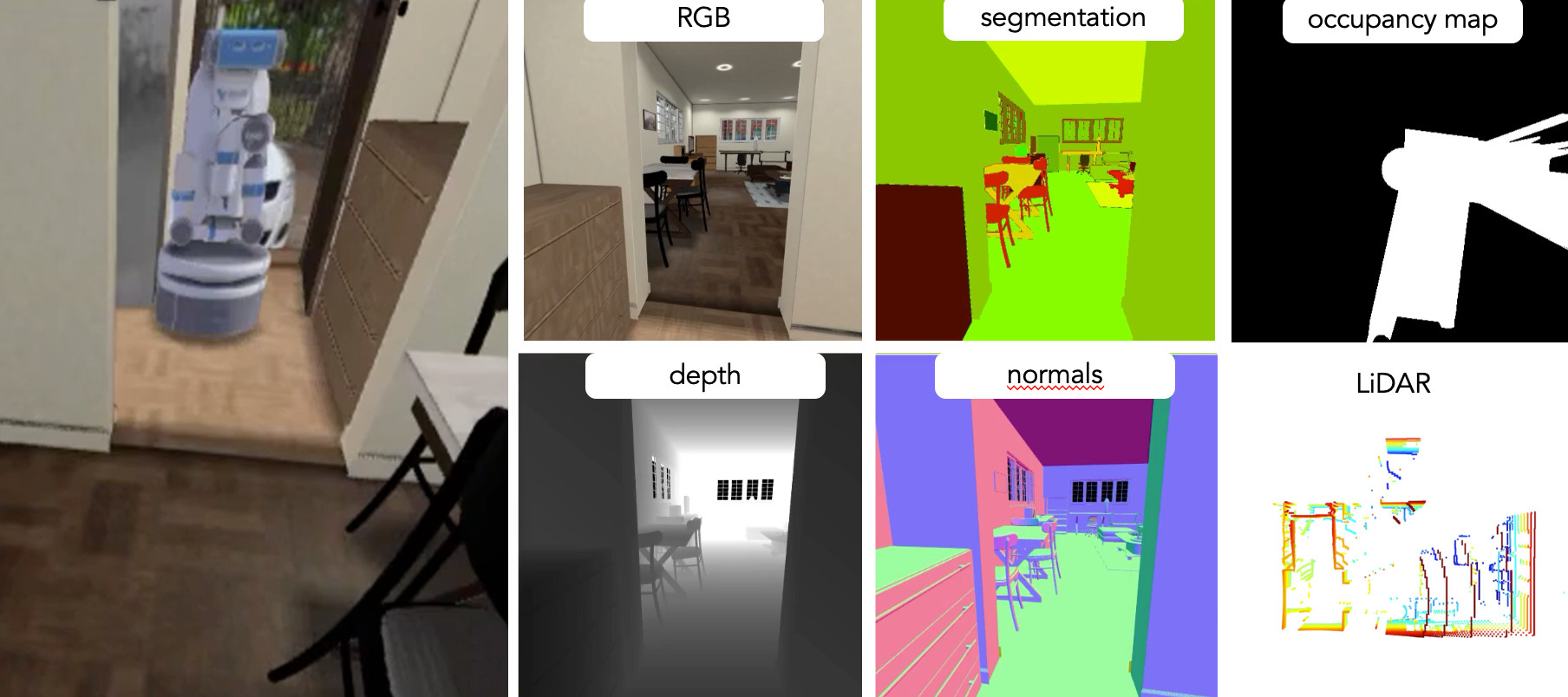

We provide three embodiments to evaluate your solutions in BEHAVIOR: a humanoid avatar used to collect human demonstrations in virtual reality, a Fetch mobile manipulator with one arm, and a Tiago bimanual mobile manipulator. You can also add your own robot!

Details of embodiment

Actuation

Agents in BEHAVIOR must change the state of the world by controlling navigation and manipulation at 30 Hz. We provide interfaces to navigate using linear and angular velocities, and to control the arm(s) in either Cartesian or joint space. We also provide a set of action primitives that can be used or extended.
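To make the velocity-based navigation interface concrete, here is a minimal sketch of how a planar base pose evolves under (linear, angular) velocity commands issued at 30 Hz. It is not the BEHAVIOR API: `BasePose`, `step_base`, and `rollout` are hypothetical names, and the unicycle model with simple Euler integration is an assumption made for illustration.

```python
import math
from dataclasses import dataclass

CONTROL_HZ = 30.0        # control rate stated in the text
DT = 1.0 / CONTROL_HZ    # duration of one control step, in seconds


@dataclass
class BasePose:
    """Hypothetical planar pose of the robot base."""
    x: float = 0.0       # metres
    y: float = 0.0       # metres
    theta: float = 0.0   # heading, radians


def step_base(pose: BasePose, linear: float, angular: float, dt: float = DT) -> BasePose:
    """Advance the pose by one control step (unicycle model, Euler integration)."""
    x = pose.x + linear * math.cos(pose.theta) * dt
    y = pose.y + linear * math.sin(pose.theta) * dt
    # Wrap the heading into [-pi, pi).
    theta = (pose.theta + angular * dt + math.pi) % (2.0 * math.pi) - math.pi
    return BasePose(x, y, theta)


def rollout(pose: BasePose, commands) -> BasePose:
    """Apply a sequence of (linear, angular) commands, one per control step."""
    for linear, angular in commands:
        pose = step_base(pose, linear, angular)
    return pose


# One second (30 steps) of driving straight at 0.5 m/s.
straight = rollout(BasePose(), [(0.5, 0.0)] * 30)

# One second of turning in place at pi/2 rad/s.
turned = rollout(BasePose(), [(0.0, math.pi / 2.0)] * 30)
```

At 30 Hz each command acts for about 33 ms, so a policy that outputs velocities has to be re-evaluated every step to keep the base on course.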

Details of actuation

Sensing and Perception

BEHAVIOR activities require agents to understand the scene, plan a strategy, and execute it based on the virtual signals generated by onboard sensors. These include visual signals (RGB-D images, segmentation, ...) and proprioception.
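As an illustration of how an RGB-D signal can feed scene understanding, the sketch below back-projects a depth image into 3D points in the camera frame using a pinhole model. Everything here is an assumption rather than part of BEHAVIOR: the function names are hypothetical, the intrinsics (`fx`, `fy`, `cx`, `cy`) are made-up values, depth is taken to be in metres, and a depth of 0 is treated as a missing reading.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def backproject_pixel(u: float, v: float, depth: float,
                      fx: float, fy: float, cx: float, cy: float) -> Point:
    """Map pixel (u, v) with the given depth to a camera-frame point.

    Assumed convention: x points right, y points down, z points forward.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def depth_to_points(depth_image: Sequence[Sequence[float]],
                    fx: float, fy: float, cx: float, cy: float) -> List[Point]:
    """Back-project every valid pixel of a row-major depth image."""
    points = []
    for v, row in enumerate(depth_image):
        for u, d in enumerate(row):
            if d > 0.0:  # assumption: non-positive depth marks a missing reading
                points.append(backproject_pixel(u, v, d, fx, fy, cx, cy))
    return points


# Tiny 2x3 depth image with one missing reading (illustrative values).
depth = [
    [1.0, 0.0, 2.0],
    [1.0, 1.0, 1.0],
]
points = depth_to_points(depth, fx=2.0, fy=2.0, cx=1.0, cy=0.5)
```

For full-resolution images the same arithmetic is usually vectorised over arrays rather than looped over pixels.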

Details of sensing

Benchmark Setups: Generalization to Unseen Conditions

Seen Scenes

The agent solves the activities in the same scene, with the same objects and configuration that it was trained with.

Unseen Object Poses

The agent solves the activities in the same scene and with the same objects, but in configurations different from those it was trained with.

Unseen House Layout

The agent solves the activities in the same scene, but with different furniture pieces and objects.
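The three setups differ in which factors stay fixed between training and evaluation. The sketch below encodes only what the descriptions above state. The `Setup` class and `varied_factors` helper are hypothetical and do not come from the benchmark's code. `None` marks a factor that the description does not mention.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Setup:
    """Hypothetical record of what a setup shares with training."""
    name: str
    same_scene: bool
    same_objects: bool
    same_configuration: Optional[bool]  # None: not stated in the description


SETUPS = [
    Setup("Seen Scenes", same_scene=True, same_objects=True, same_configuration=True),
    Setup("Unseen Object Poses", same_scene=True, same_objects=True, same_configuration=False),
    Setup("Unseen House Layout", same_scene=True, same_objects=False, same_configuration=None),
]


def varied_factors(setup: Setup) -> List[str]:
    """List the factors explicitly described as differing from training."""
    factors = {
        "scene": setup.same_scene,
        "objects": setup.same_objects,
        "configuration": setup.same_configuration,
    }
    # Keep only factors stated as different; unstated (None) ones are skipped.
    return [name for name, same in factors.items() if same is False]


for setup in SETUPS:
    print(setup.name, "varies:", varied_factors(setup) or "nothing")
```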

More information about the benchmarking setups is available in our documentation.